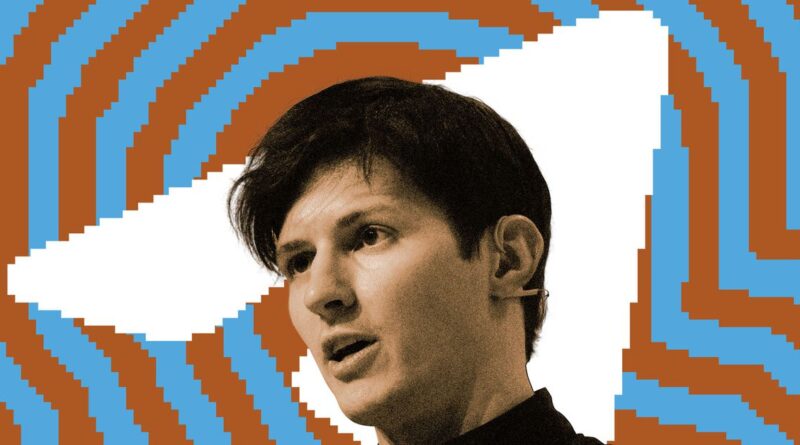

Why the Telegram CEO’s arrest is such a big deal

Telegram CEO Pavel Durov’s arrest in France on Saturday took the tech world by surprise. The 39-year-old Russian-born billionaire was detained after touching down at an airport outside of Paris in his private plane. And with scant detail available, observers wondered what the unprecedented action meant for free speech, encryption, and the risks of running a platform that could be used for crime.

On Monday, French officials revealed that Durov is being questioned as part of a wide-ranging criminal investigation surrounding crimes that regularly happen on Telegram. While some of the accusations could still raise red flags, many seem to concern serious offenses — like child abuse and terrorism — that Durov would reasonably have been aware of. But many questions remain unanswered, including how worried other tech executives should be.

Crime happens on lots of platforms. Why does Telegram stand out?

Telegram is a messaging app that was founded in 2013 by brothers Pavel and Nikolai Durov. While it’s sometimes portrayed as an “encrypted chat app,” it’s mostly popular as a semi-public communication service like Discord, particularly in countries like Russia, Ukraine, Iran, and India.

It’s a massive platform that is used by millions of innocent people every day, but it’s also gained a reputation for being a safe haven for all sorts of criminals, from scammers to terrorists.

Pavel Durov has crafted a brash pro-privacy persona in public. In an interview with Tucker Carlson this year, Durov gave examples of times that Telegram has declined to hand over data to governments: when Russia asked for information on protesters, for instance, and when US lawmakers requested data on participants in the January 6th Capitol riot. Earlier, at a 2015 TechCrunch event, Durov said that Telegram’s commitment to privacy was “more important than our fear of bad things happening, like terrorism.”

That sentiment isn’t radically out of step with what many encryption proponents believe, since strong encryption must protect all users. A “backdoor” targeting one guilty party compromises everyone’s privacy. In apps like Signal or iMessage, which use end-to-end encryption, nobody but the sender and recipient can read a message’s contents. But as experts have pointed out, Telegram doesn’t implement this in any meaningful sense. End-to-end encryption has to be enabled manually for one-on-one messaging, and it doesn’t work for group chats or public channels where illegal activity occurs in plain view.

“Telegram looks much more like a social network that is not end-to-end encrypted,” John Scott-Railton, senior researcher at Citizen Lab, told The Verge. “And because of that, Telegram could potentially moderate or have access to those things, or be compelled to.”

The ecosystem of extremist activity on the platform is so well-known that it even has a nickname: “terrorgram.” And much of it happens in the open where Telegram could identify or remove it.

Telegram does occasionally take action on illegal content. The platform has blocked extremist channels after reports from the media and revealed users’ IP addresses in response to government requests, and an official Telegram channel called “Stop Child Abuse” claims that the platform blocks more than 1,000 channels engaged in child abuse every day in response to user reports.

But there have been numerous reports of lax moderation on Telegram, with its general approach being frequently described as “hands off” compared to competitors like Facebook (which still struggles to effectively moderate its own massive platform). Even when Telegram does take action, reporters previously discovered that the service may only hide the offending channels rather than block them.

All of this puts Telegram in a unique position. It’s not taking a significantly active role in preventing criminals from using its platform, the way most big public social networks do. But it’s not disavowing its role as a moderator, either, the way a truly private platform could. “Because Telegram does have this access, it puts a target on Durov for governmental attention in a way that would not be true if it really were an encrypted messenger,” said Scott-Railton.

Why was Pavel Durov arrested? And why were other tech executives upset?

According to a statement by French prosecutor Laure Beccuau, Durov is being questioned as part of an investigation into Telegram-related crimes, which was opened on July 8th.

The listed charges include “complicity” in crimes ranging from possessing and distributing child sexual abuse material to selling narcotics and money laundering. Durov is also being investigated for refusing to comply with requests to enable “interceptions” from law enforcement and for importing and providing an encryption tool without declaring it. (While encrypted messaging is legal in France, anyone importing the tech has to register with the government.) He’s also accused of “criminal association with a view to committing a crime” punishable by more than five years in prison. The statement added that Durov’s detention could last 96 hours, until Wednesday, August 28th.

When Durov was first taken into custody, though, these details weren’t available — and prominent tech executives immediately rallied to his defense. X owner Elon Musk posted “#FreePavel” and captioned a post referencing Durov’s detention with “dangerous times,” framing it as an attack on free speech. Chris Pavlovski, CEO of Rumble — a YouTube alternative popular with right-wingers — said on Sunday that he had “just safely departed from Europe” and that Durov’s arrest “crossed a red line.”

Durov’s arrest comes amid a heated debate over the European Commission’s power to hold tech platforms responsible for their users’ behavior. The Digital Services Act, which took effect last year, has led to investigations into how tech companies handle terrorism and disinformation. Musk has recently been sparring with EU Commissioner Thierry Breton over what Breton characterizes as a reckless failure to moderate X.

Over the weekend, the public response was strong enough that French President Emmanuel Macron issued a statement saying that the arrest took place as part of an ongoing investigation and was “in no way a political decision.” Meanwhile, Telegram insisted that it had “nothing to hide” and that it complied with EU laws. “It is absurd to claim that a platform or its owner are responsible for abuse of that platform,” the company’s statement said.

Is the panic around Durov’s arrest justified?

With the caveat that the situation is still evolving, it seems like free speech is not the core issue — Durov’s alleged awareness of crimes is.

In posts on X, University of Lorraine law professor Florence G’sell noted that the most serious charges against Durov are the ones alleging direct criminal conspiracy and a refusal to cooperate with the police. By contrast, the charges around declaring encryption tech for import seem like minor offenses. (Notably, in the United States, certain import/export controls on encryption have been found to be violations of the First Amendment.) G’sell noted that there are still unknowns surrounding which criminal codes Durov could be charged under but that the key issue seems to be knowingly providing tech to criminals.

Arguably, Telegram has long operated on a knife-edge by attracting privacy-minded users — including a subset of drug dealers, terrorists, and child abusers — without implementing the kind of robust, widespread encryption that would indiscriminately protect every user and the platform itself. If child abuse or terrorism is happening in clear view, platforms have a clear legal responsibility to moderate that content.

That’s true in the US as well as in Europe. Daphne Keller, platform regulation director of the Stanford Cyber Policy Center, called Durov’s arrest “unsurprising” in X posts and said it could happen under the US legal system, too. Failing to remove child abuse material or terrorist content “could make a platform liable in most legal systems, including ours,” she wrote. Section 230, which provides a broad shield for tech platforms, notably doesn’t immunize operators from federal criminal charges.

That said, there are still many unknowns with Durov’s arrest, and there may be further developments that justify some of the concern over implications for encryption tech. References to lawful “interceptions” — a term that typically refers to platforms facilitating surveillance of users’ communications — are particularly worrying here.

European and US police have increasingly targeted encrypted chat platforms used by criminals in recent years, hacking a platform called EncroChat and even going so far as to secretly run an encrypted phone company called Anom. Notably, those platforms were focused on serving criminals. Telegram, on the other hand, is aimed at the general public. In his interview with Carlson, Durov claimed that at one point the FBI, which played a key role in the Anom operation, attempted to convince Telegram to include a surveillance backdoor.

“This case definitely illustrates — whatever you think about the quality of Telegram’s encryption — how many people care about the ability to communicate safely and privately with each other,” said Scott-Railton.

Durov’s arrest also raises the question of what should push a platform into legal liability. Serious crimes certainly occur on Facebook and nearly every other massive social network, and in at least some cases, somebody at the company was warned and failed to take action. It’s possible Durov was clearly, directly involved in a criminal conspiracy — but short of that, how ineffectual can a company’s moderation get before its CEO is detained the next time they set foot on European soil?